If you take an analytical approach to your data, you have almost certainly run into the difficulty of working with character strings, often to the point of giving up on them or setting them aside. Is it a lack of tools? The difficulty of handling complex semantics? Either way, strings are a frequent source of frustration for analysts and data scientists.

Let’s take a quick look at this.

Did you say text?

While numeric data is fairly easy to process, character strings are harder to work with. One reason is that they are difficult to put into an equation. But the real complexity lies in the semantic analysis we need to apply to them. Every sentence has a meaning, but that meaning can only be deduced after analyzing each word. A sentence is therefore a kind of recipe in which each ingredient has its quantity, its place, and a precise order.

Fortunately, everything I just described applies only to purely textual data. Why fortunately? Quite simply because we will not always be dealing with that type of data. Character string data falls broadly into three main types:

- Categorical data

- Structured strings

- Free text

We have just briefly touched on free text; now let’s look at the other types of strings.

Categorical data

Categorical data is also often called list data. As the name suggests, it is data based on lists of values. Classic examples are color or sex: when you fill out an input form, the field is a drop-down list that forces you to pick a value from a predefined list.

Of course, categorical data is not always easy to work with, because very often we do not know the list of values in advance. Worse, if you are working on a machine learning algorithm, you have to make sure that the set of possible values in the training data set is the same as in the test set. To do this, a data analysis (profiling) is needed to discover the list of values that make up the categorical data. If that analysis shows the values consistently falling into a defined list, you can conclude that the variable is categorical; otherwise it is not.
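To make the profiling step concrete, here is a minimal sketch using only the Python standard library. The function names, the sample colors, and the `max_distinct` threshold are all assumptions chosen for illustration; a real profiling tool would look at much more than this.

```python
from collections import Counter


def profile_values(values):
    """Count how often each distinct value appears in a column."""
    return Counter(values)


def looks_categorical(values, max_distinct=10):
    """Heuristic only: few distinct values, and at least one value repeats.

    The threshold is an arbitrary assumption, not a rule.
    """
    distinct = set(values)
    return len(distinct) <= max_distinct and len(distinct) < len(values)


def unseen_values(train_values, test_values):
    """Values that appear in the test set but never in the training set."""
    return set(test_values) - set(train_values)


train_colors = ["red", "blue", "red", "green", "blue", "red"]
test_colors = ["blue", "purple", "red"]

print(profile_values(train_colors))
print(looks_categorical(train_colors))
print(unseen_values(train_colors, test_colors))
```

Any value returned by `unseen_values` is a warning sign: the model will meet a category at test time that it never saw during training.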

If you are doing machine learning, you then need to encode your categorical variable, for example with one-hot encoding. In an integration project, a simple lookup will usually suffice, for example to check the consistency of the data.

Categorical data can also undergo data quality pre-processing. Imagine, for example, that the color brown was entered incorrectly (say, “brwn”, with the o missing). Its meaning has not changed, but if you do nothing you will end up with two categories: “brown” and “brwn”. It is therefore essential to map “brwn” back to “brown” beforehand so that the value keeps its meaning.
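As an illustration of both options, the sketch below hand-rolls a one-hot encoding and a lookup check in plain Python. The names `one_hot_encode`, `invalid_values`, and `COLORS` are hypothetical, and in practice you would more likely rely on the encoder provided by your machine learning library.

```python
def one_hot_encode(values, categories):
    """Return one 0/1 vector per value, with one position per known category."""
    index = {category: position for position, category in enumerate(categories)}
    encoded = []
    for value in values:
        vector = [0] * len(categories)
        if value in index:  # unknown values are left as all zeros
            vector[index[value]] = 1
        encoded.append(vector)
    return encoded


def invalid_values(values, allowed):
    """Lookup check: return the values that are not in the reference list."""
    return [value for value in values if value not in allowed]


COLORS = ["blue", "green", "red"]  # assumed reference list

print(one_hot_encode(["red", "blue"], COLORS))
print(invalid_values(["red", "purple"], set(COLORS)))
```

Leaving unknown values as an all-zero vector is one possible design choice; raising an error instead is just as defensible if unknown categories should never reach the model.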
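One possible way to automate this kind of correction is fuzzy matching against the list of known categories. The sketch below uses `difflib.get_close_matches` from the Python standard library; the category list, the misspelling "brwn", and the 0.8 cutoff are assumptions for illustration, and the cutoff in particular would need tuning on real data.

```python
import difflib

KNOWN_COLORS = ["red", "blue", "green", "brown"]  # assumed reference list


def normalize_category(raw_value, known_values, cutoff=0.8):
    """Map a possibly misspelled value to the closest known category.

    Returns None when nothing is similar enough, so the value can be
    reviewed by hand instead of being silently changed.
    """
    cleaned = raw_value.strip().lower()
    if cleaned in known_values:
        return cleaned
    matches = difflib.get_close_matches(cleaned, known_values, n=1, cutoff=cutoff)
    return matches[0] if matches else None


print(normalize_category("brwn", KNOWN_COLORS))
print(normalize_category(" Brown ", KNOWN_COLORS))
print(normalize_category("xyz", KNOWN_COLORS))
```

Fuzzy matching can also merge two categories that are genuinely different but spelled similarly, so an explicit correction table is the safer option when the list of known errors is short.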

Structured strings

Structured strings are often the easiest to process. This is certainly true when we know the structure, as with XML, JSON, postal addresses (AFNOR standard), phone numbers, etc.

But things get more complex as soon as we do not really know the structure. Here again, profiling can help discover it, but often this will not be enough and a more in-depth manual analysis will be required.
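When the structure is known, a parser or a pattern does most of the work. Here is a small sketch: JSON is handled by the standard `json` module, while the phone check relies on a deliberately simplified pattern (an optional `+` followed by 6 to 15 digits) that is an assumption and not a real numbering standard.

```python
import json
import re

# Simplified, hypothetical phone format: optional "+" then 6 to 15 digits.
PHONE_PATTERN = re.compile(r"\+?\d{6,15}")


def parse_json_record(text):
    """Parse a JSON string, returning None when it is malformed."""
    try:
        return json.loads(text)
    except json.JSONDecodeError:
        return None


def is_plausible_phone(text):
    """Strip common separators, then check the simplified pattern."""
    compact = re.sub(r"[\s.\-()]", "", text)
    return PHONE_PATTERN.fullmatch(compact) is not None


print(parse_json_record('{"color": "brown"}'))
print(parse_json_record("{color: brown"))
print(is_plausible_phone("+33 1 23 45 67 89"))
print(is_plausible_phone("call me"))
```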
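A common profiling trick for unknown structures is to replace every letter and digit with a placeholder and count the resulting patterns. The sketch below is one way to do it; the sample values and the `A`/`9` placeholders are assumptions for illustration.

```python
import re
from collections import Counter


def mask(value):
    """Replace each ASCII letter with 'A' and each digit with '9'."""
    masked = re.sub(r"[A-Za-z]", "A", value)
    return re.sub(r"[0-9]", "9", masked)


def profile_patterns(values):
    """Count how many values share each masked pattern."""
    return Counter(mask(value) for value in values)


references = ["AB-1234", "CD-5678", "12/2020", "EF-0001"]  # made-up sample

print(profile_patterns(references).most_common())
```

A dominant pattern suggests an underlying structure, and the rare patterns are the ones worth inspecting by hand.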

In many cases, it will be necessary to use specialized tools, such as address validation tools.

Free text

I’m finally getting there: textual data! This is where we usually get stuck, and where the frustration of not being able to use the information is felt. Why? Quite simply because a sentence has a meaning, a semantics, and a simple taxonomy-based analysis will not always be enough. We therefore have to treat these strings with a different approach.

This is known as text mining.

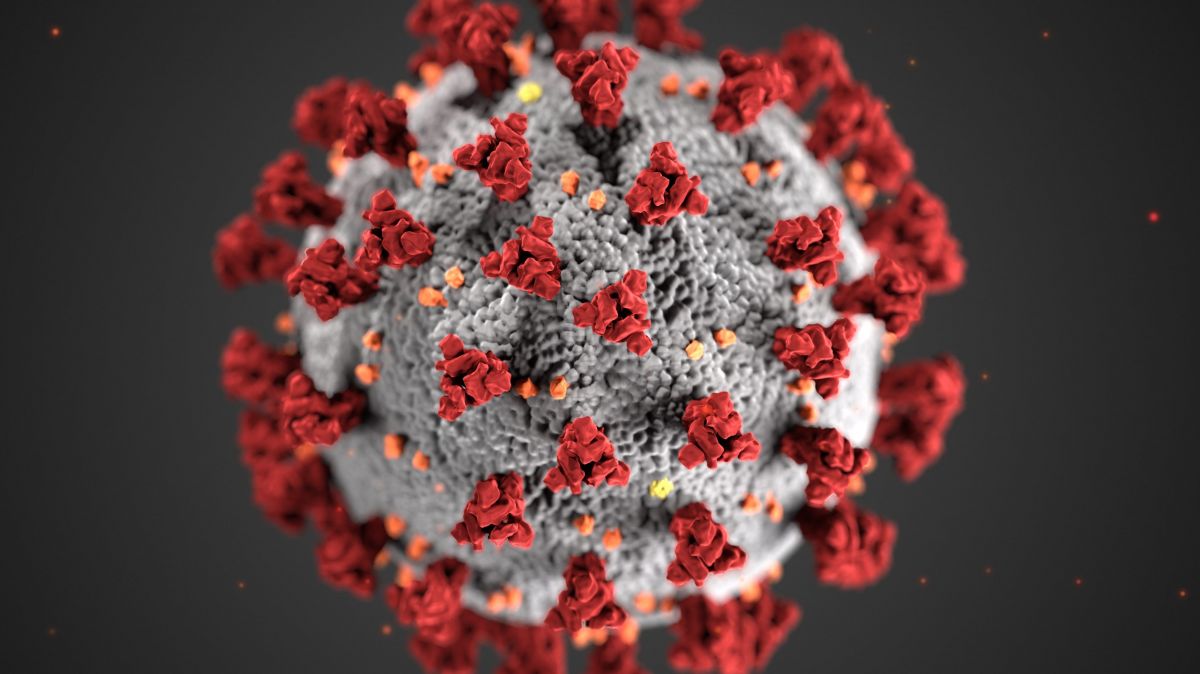

Text mining is an important part of another very popular discipline: artificial intelligence. Wikipedia defines it as follows: “It designates a set of computer processes that consist of extracting knowledge, according to a criterion of novelty or similarity, from texts produced by humans for humans. In practice, this amounts to turning a simplified model of linguistic theories into algorithms, within computer systems for learning and statistics.”

In general, there are two main stages in text mining: decomposition and the analysis itself. Decomposition identifies the phonemes, the sentences, the grammatical roles, the taxonomy, and so on. In short, the idea is to split the text intelligently and to find, within that split, the place and role of each grammatical element.
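To give a rough idea of what decomposition means at its very simplest, the sketch below splits a text into sentences and then into words using regular expressions. It is a naive illustration built on assumed rules (sentences end with `.`, `!` or `?`; words are runs of ASCII letters and apostrophes) and it does not recover grammatical roles, which requires a dedicated NLP library.

```python
import re


def split_sentences(text):
    """Naive split: break after '.', '!' or '?' followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def split_words(sentence):
    """Lowercase the sentence and keep runs of ASCII letters and apostrophes."""
    return re.findall(r"[a-z']+", sentence.lower())


def decompose(text):
    """Return the text as a list of sentences, each a list of words."""
    return [split_words(sentence) for sentence in split_sentences(text)]


sample = "Strings are hard. Numbers are easy!"

print(split_sentences(sample))
print(decompose(sample))
```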

The second stage is more complex because it involves analyzing the sentences in detail, separating or even isolating the groups of words that carry meaning so that they can be put to use.

I will stop there for this article, but future posts will offer a gradual approach to text analysis, starting with bag-of-words analysis and then moving on to NLP (Natural Language Processing).